National research data infrastructure: TUM involved in three consortia

More effective sharing of research data

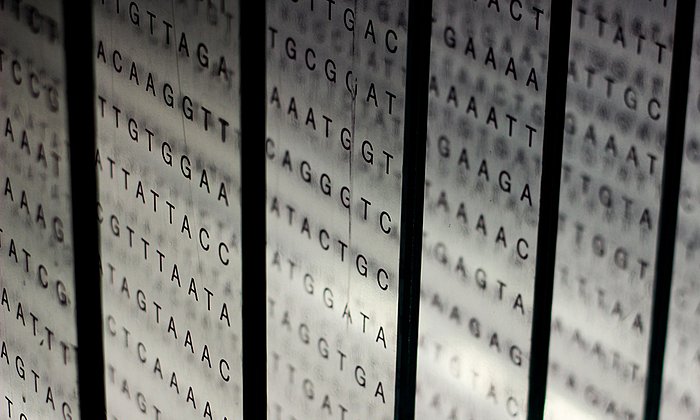

GHGA

Genome sequencing produces immense quantities of data. The aim of the German Human Genome-Phenome Archive (GHGA) is to make these data available to science without violating patients' privacy rights. The GHGA will focus initially on data collections pertaining to cancer and rare genetic disorders. Several nodes will be created across Germany through which researchers can access datasets. The sub-archive established under TUM's leadership at the Leibniz Supercomputing Center (LRZ) could provide access to pseudonymized datasets on rare genetic disorders from Bavarian researchers. The project is headed at TUM by Julien Gagneur, Professor of Computational Molecular Medicine, Juliane Winkelmann, Professor of Neurogenetics, and Thomas Meitinger, Professor of Human Genetics. In their part of the project, the scientists will address, among other topics, the challenges posed by databases on rare genetic disorders. A particular technical challenge with data on these disorders is how to make the data records comparable. The researchers will also develop the interface that users will use to generate data output.

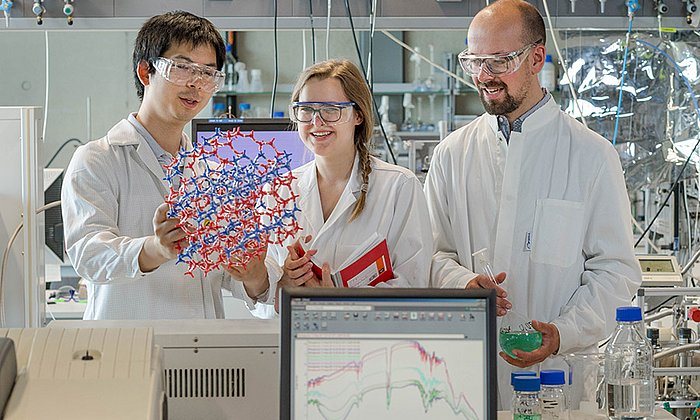

NFDI4Cat

A uniform format for making experimental research data on catalytic processes accessible is still lacking. The NFDI4Cat consortium aims to create such a standard. The members will develop an information structure that allows data from heterogeneous and homogeneous catalysis (fields that use highly diverse working methods), as well as from photo-, bio- and electrocatalysis, to be captured in a uniform format that supports interoperability. The data records need to be as detailed as possible to avoid information losses, but must not be so large that the data structures can no longer be analyzed. The goal of this project is not only to make experimental processes understandable, but also to enable machine learning to predict catalytic processes using data records from widely differing fields. Johannes Lercher, who holds the Chair for Chemical Technology II at TUM, is working with colleagues on the subproject "Heterogeneous Catalysis". With his team, he is developing proposals on questions such as which kinetic data on the materials involved must be captured in this field of study. In cooperation with the other areas, the project will attempt to find the most suitable uniform format and the best solutions for making these data accessible to researchers across Germany.

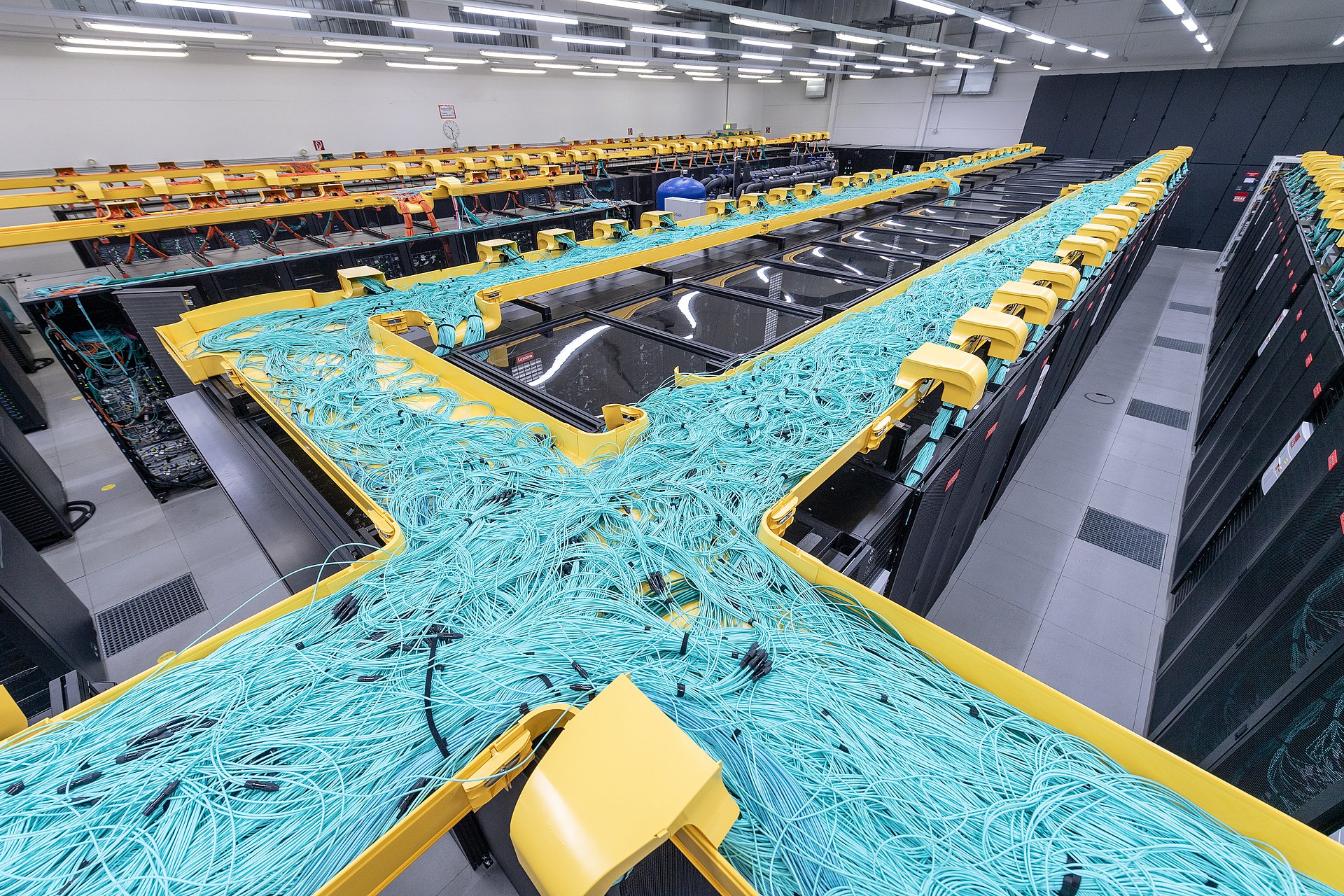

NFDI4ING

Large quantities of research data are produced in the engineering sciences as well. The vast range of topics results in a wide diversity of data formats. The goal of the NFDI4ING consortium is to establish effective data management to facilitate more extensive use of the data, including for machine learning applications. The results of research in engineering will also be made more transparent and understandable. For this purpose, the team began by identifying various data archetypes, i.e. classes of research data that share certain characteristics and specific processing challenges. Christian Stemmer, a professor at the TUM Chair of Aerodynamics and Fluid Mechanics, is a member of the steering committee and the spokesman of the subsection "High-performance Measurement and Computation". This archetype is concerned with very large datasets. These are either generated on supercomputers or measured in high-resolution experiments and can only be processed in large computing facilities. Among other challenges, Prof. Stemmer and his team want to study in the coming years how researchers can gain access to these often very large datasets. One goal is to create tools that can retrieve results without bringing the data centers where the data are stored to a standstill. Another area of work for the scientists is defining understandable metadata as a basis for finding and reusing data records.

Technical University of Munich

Corporate Communications Center

- Paul Hellmich

- paul.hellmich@tum.de

- presse@tum.de
