Post-quantum cryptography

Quantum-safe data encryption

We happily surf the internet, disclose our credit card details to online retailers and use online banking without a second thought. We assume that our data are transmitted in encrypted form and are therefore safe.

But what will happen if the time comes when the encryption technologies now in use are no longer safe? At present, data encryption methods are based on the idea that the factorization of large numbers is a difficult task. But that will change when quantum computers are powerful enough to solve mathematical problems of this kind.

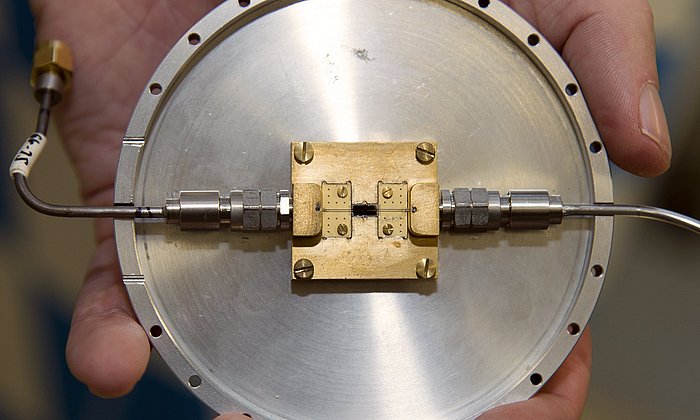

Encryption processes are used in practically every electronic device. Many such devices have very long operational lifetimes. They can be updated, if at all, only with great difficulty. Satellite communications are just one example.

Competition for new encryption technology

The National Institute of Standards and Technology (NIST) in the USA announced a competition in 2016, calling on developers to submit proposals for new, quantum-resistant encryption standards. The proposed algorithms are expected to resist cyber attacks launched from quantum computers. The NIST has made them publicly accessible to be attacked in order to test their security levels. NIST standards are generally adopted by companies and online services because they are regarded as highly secure.

Of the 69 first-round submissions, 26 made it to the second round and seven reached the final. Shortly before the NIST planned to announce winners, however, four of the finalists came under heavy attack. One of the algorithms actually had to be withdrawn after being defeated within two days by a standard laptop. The vulnerabilities of the remaining candidates were adapted sufficiently for them to remain in the competition.

Despite the many submissions, very few algorithms made it through the knock-out rounds. This shows the importance of standardizing processes that are based on different mathematical problems. This will make it possible to substitute encryption methods if vulnerabilities are identified later.

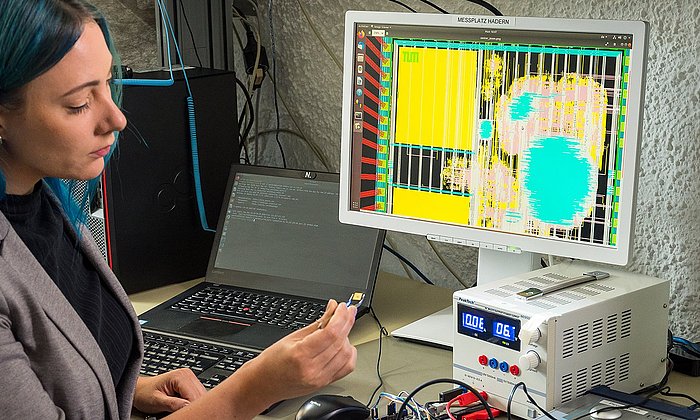

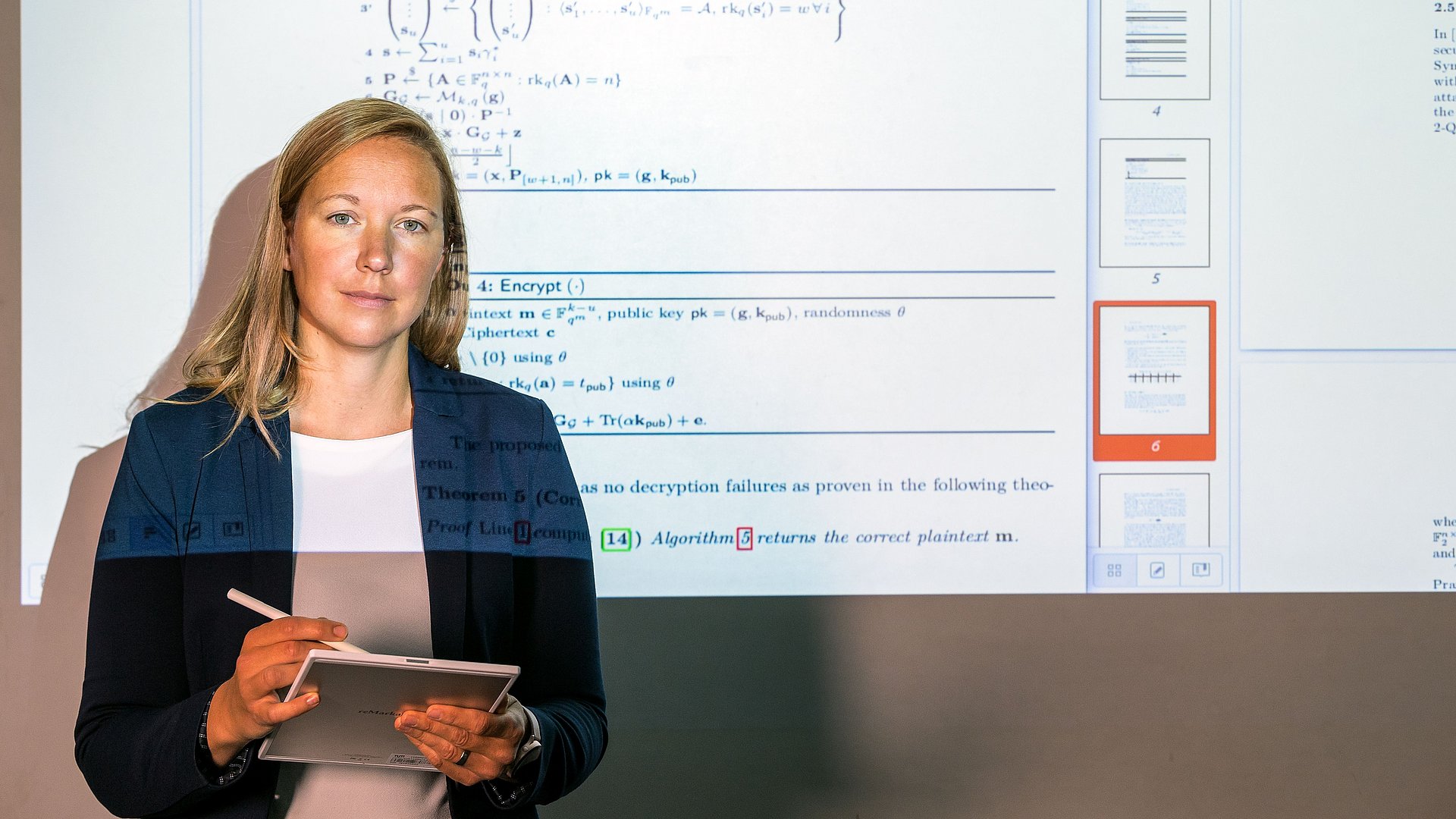

In the spring of this year the NIST called for submissions of further algorithms. Antonia Wachter-Zeh, a Professor of Coding and Cryptography at TUM, has worked with her team and another research group at TUM and researchers from Universita Politecnica delle Marche in Italy to develop two algorithms based on digital signature schemes. Digital signatures are like an electronic “fingerprint” that ensures that data originate with the expected sender and have not been changed en route.

“There is an urgent need to explore new encryption procedures if we still want our data to be secure a few years from now. We also want to make sure that people cannot decrypt the information that we are sending today,” says Wachter-Zeh.

Built-in errors ensure secure encryption

The algorithms submitted by Prof. Wachter-Zeh are based on error-correcting codes. These apply the underlying principle that errors constantly occur in the transmission and storage of data and in mobile communication networks. In binary systems, for example, a 0 might be flipped to a 1 when transmitting a string. In error-correcting codes, redundant information is inserted before transmission to allow correction in case of transmission errors. This makes it possible to compensate for a certain number of errors in the data.

Prof. Wachter-Zeh uses the principle of error-correcting codes to encrypt data by deliberately inserting errors before transmission. These are later corrected during decoding. In this way the researcher ensures that the information is protected against unauthorized access but can still be correctly encrypted and stored.

For the NIST competition the research group decided to submit one system based on error-correcting codes in the Lee metric and another that uses restrictive errors in the Hamming metric. The classical Hamming distance indicates the number of positions in which the source code differs from the encrypted code. By contrast, the restrictive Hamming distance allows errors to be assumed only for specific values. It is possible for a significantly greater number of places to be incorrect. The Lee metric also assigns a weighting to the variation in these places.

“The CROSS signature procedure, which uses restrictive errors, is highly competitive and has good chances to be considered as a new encryption standard. In our second algorithm, FuLeeca, based on the Lee metric, vulnerabilities have already been identified. The principle is generally promising, however, although quite new. Consequently, there is still a lot of research to be done,” says Antonia Wachter-Zeh.

In cryptography two different data encryption methods are used: symmetric and asymmetric encryption. Asymmetric systems are based on a private and a public key and are known as public key cryptography. Each communicating party has a pair of keys, with a private and a public key. The public key is accessible to anyone. The private key is known only to the party who holds both keys. The public key can be used to encrypt data before transmission to the holder of the private key. The receiver of the message uses the private key to decrypt the data.

In symmetric encryption, both parties have the same key, which must be exchanged secretly. To share this key, however, public key cryptography is also used. The technique of using an asymmetric encryption process to send the key for a symmetric process is called a key encapsulation mechanism (KEM).

Symmetric encryption schemes are assumed to be adaptable with relative ease and is therefore regarded as quantum-safe. For this reason, the NIST competition is focusing on asymmetric cryptographic systems and in particular on digital signatures and key encapsulation mechanisms.

- The NIST announces competitions on various topics on a regular basis in order to ensure IT security in the face of anticipated problems. The competitions always run for several years, have multiple rounds and operate on an elimination basis.

- Three criteria will be assessed for proposals in the current NIST competition: security, performance and implementation characteristics. The codes must be submitted in the C programming language and the source code must be publicly accessible.

- Prof. Wachter-Zeh is also currently involved in the German-French DFG-ANR project CROWD on new code classes in cryptography, in which she is the initiator and German coordinator, and the EiC Pathfinder Challenges project DiDaX on DNA-based digital data storage.

- Her research has been funded, among other sources, under the DFG Emmy Noether Program , the ongoing ERC project inCREASE (“Coding for Security and DNA Storage”) and the BMBF project 6G-life. Along with many other awards and honors, she received the Heinz Maier-Leibnitz Prize in 2018 and the NVMW Memorable Paper Award in 2019.

Technical University of Munich

Corporate Communications Center

- Julia Rinner

- julia.rinner@tum.de

- presse@tum.de

- Teamwebsite

Contacts to this article:

Prof. Antonia Wachter-Zeh

Professorship of Coding and Cryptography

antonia.wachter-zeh@tum.de

Phone: +49 89 289 – 23495